5.10 Exercises

These exercises use AILog, a simple logical reasoning system that implements all of the reasoning discussed in this chapter. It is available from the book web site.

- Exercise 5.1:

- Suppose we want to be able to reason about an electric kettle plugged

into a power outlet for the electrical domain. Suppose a kettle must be plugged into a

working power outlet, it must be turned on, and it must be filled with

water, in order to heat.

Using AILog syntax, write axioms that let the system determine whether kettles are heating. AILog code for the electrical environment is available from the web.

You must

- Exercise 5.2:

- Consider the domain of house plumbing represented in

the following diagram:

Figure 5.13: The plumbing domain

Figure 5.13: The plumbing domain

In this example, p1, p2, and p3 denote cold water pipes; t1, t2, and t3 denote taps; d1, d2, and d3 denote drainage pipes; shower denotes a shower; bath denotes a bath; sink denotes a sink; and floor denotes the floor. Figure 5.13 is intended to give the denotation for the symbols.

Suppose we have the following atoms:

- pressurized_pi is true if pipe pi has mains pressure in it.

- on_ti is true if tap ti is on.

- off_ti is true if tap ti is off.

- wet_b is true if b is wet (b is either the sink, bath or floor).

- flow_pi is true if water is flowing through pi.

- plugged_sink is true if the sink has the plug in.

- plugged_bath is true if the bath has the plug in.

- unplugged_sink is true if the sink does not have the plug in.

- unplugged_bath is true if the bath does not have the plug in.

A definite-clause axiomatization for how water can flow down drain d1 if taps t1 and t2 are on and the bath is unplugged is

pressurized_p1.

pressurized_p2 ←on_t1 ∧pressurized_p1.

flow_shower ←on_t2 ∧pressurized_p2.

wet_bath ←flow_shower.

flow_d2 ←wet_bath ∧unplugged_bath.

flow_d1 ←flow_d2.

on_t1.

on_t2.

unplugged_bath.- Finish the axiomatization for the sink in the same manner as the axiomatization for the bath. Test it in AILog.

- What information would you expect the user to be able to provide that the plumber, who is not at the house, cannot? Change the axiomatization so that questions about this information are asked of the user.

- Axiomatize how the floor is wet if the sink overflows or the bath overflows. They overflow if the plug is in and water is flowing in. You may invent new atomic propositions as long as you give their intended interpretation. (Assume that the taps and plugs have been in the same positions for one hour; you do not have to axiomatize the dynamics of turning on the taps and inserting and removing plugs.) Test it in AILog.

- Suppose a hot water system is installed to the left of tap t1. This has another tap in the pipe leading into it and supplies hot water to the shower and the sink (there are separate hot and cold water taps for each). Add this to your axiomatization. Give the denotation for all propositions you invent. Test it in AILog.

- Exercise 5.3:

- You are given the following knowledge base:

a ←b ∧c.

a ←e ∧f.

b ←d.

b ←f ∧h.

c ←e.

d ←h.

e.

f ←g.

g ←c.- Give a model of the knowledge base.

- Give an interpretation that is not a model of the knowledge base.

- Give two atoms that are logical consequences of the knowledge base.

- Give two atoms that are not logical consequences of the knowledge base.

- Exercise 5.4:

- You are given the knowledge base KB containing the following clauses:

a←b∧c.

b←d.

b←e.

c.

d←h.

e.

f←g∧b.

g←c∧k.

j←a∧b.- Show how the bottom-up proof procedure works for this example. Give all logical consequences of KB.

- f is not a logical consequence of KB. Give a model of KB in which f is false.

- a is a logical consequence of KB. Give a top-down derivation for the query ask a.

- Exercise 5.5:

- A bottom-up proof procedure can incorporate an ask-the-user mechanism by asking the user about every askable atom. How can a bottom-up proof procedure still guarantee proof of all (non-askable) atoms that are a logical consequence of a definite-clause knowledge base without asking the user about every askable atom?

- Exercise 5.6:

- This question explores how having an explicit semantics can

be used to debug programs. The file

elect_bug2.ailin the AILog distribution on the book web site is an axiomatization of the electrical wiring domain of Figure 5.2, but it contains a buggy clause (one that is false in the intended interpretation shown in the figure). The aim of this exercise is to use AILog to find the buggy clause, given the denotation of the symbols given in Example 5.5. To find the buggy rule, you won't even need to look at the knowledge base! (You can look at the knowledge base to find the buggy clause if you like, but that won't help you in this exercise.) All you must know is the meaning of the symbols in the program and what is true in the intended interpretation.The query lit_l1 can be proved, but it is false in the intended interpretation. Use the how questions of AILog to find a clause whose head is false in the intended interpretation and whose body is true. This is a buggy rule.

- Exercise 5.7:

-

Consider the following knowledge base and assumables aimed to explain

why people are acting suspiciously:

goto_forest ←walking.

get_gun←hunting.

goto_forest←hunting .

get_gun←robbing.

goto_bank←robbing.

goto_bank←banking.

fill_withdrawal_form ←banking.

false ←banking ∧robbing.

false ←wearing_good_shoes∧goto_forest.

assumable walking,hunting,robbing,banking.- Suppose get_gun is observed. What are all of the minimal explanations for this observation?

- Suppose get_gun∧goto_bank is observed. What are all of the minimal explanations for this observation?

- Is there something that could be observed to remove one of these as a minimal explanation? What must be added to be able to explain this?

- What are the minimal explanations of goto_bank?

- What are the minimal explanations of goto_bank∧get_gun ∧fill_withdrawal_form?

- Exercise 5.8:

-

Suppose there

are four possible diseases a particular patient may have:

p, q, r, and s.

p causes spots.

q causes spots.

Fever could be caused by one (or more) of q, r, or s.

The patient has spots and fever. Suppose you have decided to use

abduction to diagnose this patient based on the symptoms.

- Show how to represent this knowledge using Horn clauses and assumables.

- Show how to diagnose this patient using abduction. Show clearly the query and the resulting answer(s).

- Suppose also that p and s cannot occur together. Show how that changes your knowledge base from part (a). Show how to diagnose the patient using abduction with the new knowledge base. Show clearly the query and the resulting answer(s).

- Exercise 5.9:

- Consider the following clauses and integrity constraints:

false←a∧b.

false←c.

a←d.

a←e.

b←d.

b←g.

b←h.

c←h.Suppose the assumables are {d,e,f,g,h,i}. What are the minimal conflicts?

- Exercise 5.10:

- Deep Space One

(http://nmp.jpl.nasa.gov/ds1/), a

spacecraft launched by NASA in October 1998, used AI technology

for its diagnosis and control. For more details, see Muscettola et al. (1998)

or

http://ic.arc.nasa.gov/projects/remote-agent/

(although these references are not necessary to complete this question).

Figure 5.14: Deep Space One engine design

Figure 5.14: Deep Space One engine design

Figure 5.14 depicts a part of the actual DS1 engine design. To achieve thrust in an engine, fuel and oxidizer must be injected. The whole design is highly redundant to ensure its operation even in the presence of multiple failures (mainly stuck or inoperative valves). Note that whether the valves are black or white, and whether or not they have a bar are irrelevant for this assignment.

Each valve can be okay (or not) and can be open (or not). The aim of this assignment is to axiomatize the domain so that we can do two tasks:

- Given an observation of the lack of thrust in an engine and given which valves are open, using consistency-based diagnosis, determine what could be wrong.

- Given the goal of having thrust and given the knowledge that some valves are okay, determine which valves should be opened.

For each of these tasks, you must think about what the clauses are in the knowledge base and what is assumable.

The atoms should be of the following forms:

- open_V is true if valve V is open. This the atoms should be open_v1, open_v2, and so on.

- ok_V is true if valve V is working properly.

- pressurized_V is true if the output of valve V is pressurized with gas. You should assume that pressurized_t1 and pressurized_t2 are true.

- thrust_E is true if engine E has thrust.

- thrust is true if no thrust exists in either engine.

- nothrust is true if there is no thrust.

To make this manageable, only write rules for the input into engine e1. Test your code using AILog on a number of examples.

- Exercise 5.11:

- Consider using abductive diagnosis on the problem

in the previous question.

Suppose the following:

- Valves can be open or closed. For some of them, we know if they are open or closed, and for some valves we do not know.

- A valve can be ok, in which case the gas will flow if the valve is open and not if it is closed; broken, in which case gas never flows; stuck, in which case gas flows independently of whether the valve is open or closed; or leaking, in which case gas flowing into the valve leaks out instead of flowing through.

- There are three gas sensors that can detect gas leaking (but not which gas); the first gas sensor detects gas from the rightmost valves (v1,..., v4), the second gas sensor detects gas from the center valves (v5,..., v12), and the third gas sensor detects gas from the leftmost valves (v13,..., v16).

- Axiomatize the domain so the system can explain thrust or no thrust in engine e1 and the presence of gas in one of the sensors. For example, it should be able to explain why e1 is thrusting. It should be able to explain why e1 is not thrusting and there is a gas detected by the third sensor.

- Test your axiomatization on some non-trivial examples.

- For some of the queries, many explanations exist. Suggest how the number of explanations could be reduced or managed so that the abductive diagnoses are more useful.

- Exercise 5.12:

- AILog has askables, which are atoms that are asked of the user,

and assumables, which are collected in an answer.

Imagine you are axiomatizing the wiring in your home and you have an axiomatization similar to that of Example 5.3. You are axiomatizing the domain for a new tenant who is going to sublet your home and may want to determine what may be going wrong with the wiring.

There are some atoms that you will know the rules for, some that the tenant will know, and some that neither will know. Divide the atomic propositions into these three categories, and suggest which should be made askable and which should be assumable. Show what the resulting interaction will look like under your division.

- Exercise 5.13:

- In this question, consider using integrity constraints and

consistency-based diagnosis in a purchasing agent that

interacts with various information sources on the web.

To answer a question, the purchasing agent will ask a number of the

information sources for facts. However, information sources are sometimes

wrong. It is useful to be able to automatically determine which

information sources may be wrong when a user gets conflicting information.

In this question we consider how integrity constraints and assumables can be used to determine what errors are present in different information sources.

In this question, we use meaningless symbols such as a, b, c, ..., but in a real domain there will be meaning associated with the symbols, such as a meaning "there is skiing in Hawaii" and z meaning "there is no skiing in Hawaii", or a meaning "butterflies do not eat anything" and z meaning "butterflies eat nectar". We will use meaningless symbols in this question because the computer does not have access to the meanings and must simply treat them as meaningless symbols.

Suppose the following information sources and associated information are provided:

- Source s1:

- Source s1 claims the following clauses are

true:

a ←h.

d ←c. - Source s2:

- Source s2 claims the following clauses are

true:

e ←d.

f ←k.

z ←g.

j. - Source s3:

- Source s3 claims the following clause is

true:

h ←d.

- Source s4:

- Source s4 claims the following clauses are

true:

a ←b ∧e.

b ←c. - Source s5:

- Source s5 claims the following clause is

true:

g ←f ∧j.

- Yourself:

- Suppose that you know that the following clauses are

true:

false ←a ∧z.

c.

k.

Not every source can be believed, because together they produce a contradiction.

- Code the knowledge provided by the users into AILog using assumables. To use a clause provided by one of the sources, you must assume that the source is reliable.

- Use the program to find the conflicts about what sources are reliable. (To find conflicts you can just ask false.)

- Suppose you would like to assume that as few sources as possible are unreliable. Which single source, if it was unreliable, could account for a contradiction (assuming all other sources were reliable)?

- Which pairs of sources could account for a contradiction (assuming all other sources are reliable) such that no single one of them could account for the contradiction?

- Exercise 5.14:

-

Suppose you have a job at a company that is building online teaching

tools. Because you have taken an AI course,

your boss wants to know your opinion on various options under

consideration.

They are planning on building an intelligent tutoring system for teaching elementary physics (e.g., mechanics and electromagnetism). One of the things that the system must do is to diagnose errors that a student may be making.

For each of the following, answer the explicit questions and use proper English. Answering parts not asked or giving more than one answer when only one is asked for will annoy the boss. The boss also does not like jargon, so please use straightforward English.

The boss has heard of consistency-based diagnosis and abductive diagnosis but wants to know what they involve in the context of building an intelligent tutoring system for teaching elementary physics.

- Explain what knowledge (about physics and about students) is requried for consistency-based diagnosis.

- Explain what knowledge (about physics and about students) is requried for abductive diagnosis.

- What is the main advantage of using abductive diagnosis over consistency-based diagnosis in this domain?

- What is the main advantage of consistency-based diagnosis over abductive diagnosis in this domain?

- Exercise 5.15:

- Consider the bottom-up negation-as-failure proof procedure of Figure 5.11. Suppose we want to allow for incremental addition and deletion of clauses. How does C change as a clause is added? How does C change if a clause is removed?

- Exercise 5.16:

- Suppose you are implementing a bottom-up Horn clause explanation reasoner and you want to incrementally add clauses or assumables. When a clause is added, how are the minimal explanations affected? When an assumable is added, how are the minimal explanations affected?

- Exercise 5.17:

-

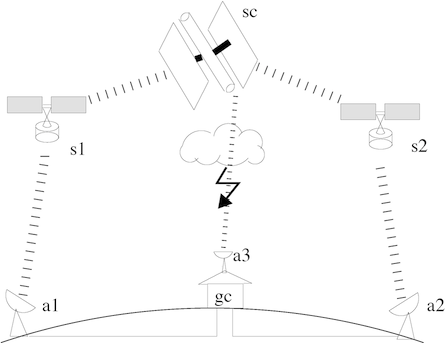

Figure 5.15 shows a simplified redundant communication

network between an unmanned spacecraft (sc) and a ground control center

(gc). There are two indirect high-bandwidth (high-gain) links that

are relayed through satellites (s1, s2) to different ground antennae

(a1, a2). Furthermore, there is a direct, low-bandwidth (low-gain)

link between the ground control center's antenna (a3) and the

spacecraft. The low-gain link is affected by atmospheric

disturbances - it works if there are no disturbances (no_dist) - and the

spacecraft's low-gain transmitter (sc_lg) and antenna 3 are

okay. The high-gain links always work if the spacecraft's high-gain

transmitter (sc_hg), the satellites' antennae (s1_ant, s2_ant), the

satellites' transmitters (s1_trans, s2_trans), and the ground

antennae (a1, a2) are okay.

To keep matters simple, we consider only messages from the spacecraft going through these channels to the ground control center.

Figure 5.15: A space communication network

Figure 5.15: A space communication networkThe following knowledge base formalizes the part of the communication network we are interested in:

send_signal_lg_sc ←ok_sc_lg ∧alive_sc.

send_signal_hg_sc ←ok_sc_hg ∧alive_sc.

get_signal_s1 ←send_signal_hg_sc ∧ok_s1_ant.

get_signal_s2 ←send_signal_hg_sc ∧ok_s2_ant.

send_signal_s1 ←get_signal_s1 ∧ok_s1_trans.

send_signal_s2 ←get_signal_s2 ∧ok_s2_trans.

get_signal_gc ←send_signal_s1 ∧ok_a1.

get_signal_gc ←send_signal_s2 ∧ok_a2.

get_signal_gc ←send_signal_lg_sc ∧ok_a3 ∧no_dist.Ground control is worried, because it has not received a signal from the spacecraft (no_signal_gc). It knows for sure that all ground antennae are okay (i.e., ok_a1, ok_a2, and ok_a3) and satellite s1's transmitter is ok (ok_s1_trans). It is not sure about the state of the spacecraft, its transmitters, the satellites' antennae, s2's transmitter, and atmospheric disturbances.

- Specify a set of assumables and an integrity constraint that model the situation.

- Using the assumables and the integrity constraints from part (a), what is the set of minimal conflicts?

- What is the consistency-based diagnosis for the given situation? In other words, what are the possible combinations of violated assumptions that could account for why the control center cannot receive a signal from the spacecraft?

- Exercise 5.18:

- Explain why NASA may want to use abduction rather than consistency-based diagnosis for the domain of Exercise 5.17.

- Suppose that an atmospheric disturbance dist could produce static or no signal in the low-bandwidth signal. To receive the static, antenna a3 and the spacecraft's low-bandwidth transmitter sc_lg must be working. If a3 or sc_lg are not working or sc is dead, there is no signal. What rules and assumables must be added to the knowledge base of Exercise 5.17 so that we can explain the possible observations no_signal_gc, get_signal_gc, or static_gc? You may ignore the high-bandwidth links. You can invent any symbols you need.